I'm wondering whether I'm using the intersection/junction/`setNextTurn` feature correctly, and I'd like some guidance to either troubleshoot the issue or conclude that Pixy2 simply can't work for my project.

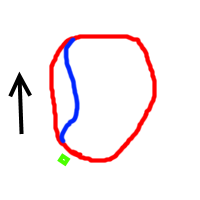

I am making a big O-shaped line (a simple 15 m closed loop).

I use a simple two-wheel-drive system with the Pixy2 mounted between the two wheels, about 8 to 10 cm above the ground and tilted at about 60 degrees (I still need to take precise measurements). The line pattern taped on the floor is 50 mm of white, then 25 mm of black, then another 50 mm of white.

I then record a time-lapse video of the system and watch the vehicle go around the loop for 8 hours straight.

=> It works fine.

Now I'm trying to introduce the concept of a junction.

(The barcode on the floor is shown in green.)

The idea is to reproduce the system shown on the main Pixy2 website: read a barcode, increment a counter, then go left if the counter is even and right otherwise (and switch two LEDs on/off to show which way it will go next).
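
The even/odd rule above can be sketched in plain C++ as follows. This is only an illustration, not the code running on the robot: `turnAngleForCount`, `BarcodeCounter`, and the ±45 degree constants are hypothetical names and values. The sketch also assumes the barcode may stay visible for several consecutive frames, so it counts only the moment the barcode first appears; a counter that increments on every frame would flip parity unpredictably.

```cpp
#include <cstdint>

// Assumed turn angles. Per the Pixy2 docs, positive is left, negative is right.
constexpr int16_t kLeftTurnDeg = 45;
constexpr int16_t kRightTurnDeg = -45;

// Hypothetical helper: even count -> left, odd count -> right.
int16_t turnAngleForCount(uint32_t barcodeCount) {
    const bool even = (barcodeCount % 2 == 0);
    return even ? kLeftTurnDeg : kRightTurnDeg;
}

// Hypothetical helper: counts one barcode pass per appearance,
// no matter how many consecutive frames the barcode stays in view.
struct BarcodeCounter {
    uint32_t count = 0;
    bool wasVisible = false;

    // Call once per camera frame. Returns true only on the frame
    // where the barcode goes from "not visible" to "visible".
    bool update(bool visibleNow) {
        const bool risingEdge = visibleNow && !wasVisible;
        if (risingEdge) {
            ++count;
        }
        wasVisible = visibleNow;
        return risingEdge;
    }
};
```

On the robot, the value returned by `turnAngleForCount(counter.count)` would be the argument passed to `setNextTurn()` each time `update()` returns true.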

I basically call `setNextTurn(-45)` or `setNextTurn(+45)` (I also tried +90/-90).

Source: https://docs.pixycam.com/wiki/doku.php?id=wiki:v2:line_api

> `setNextTurn(int16_t angle)`
>
> This function tells the line tracking algorithm which path it should take at the next intersection.
>
> Turn angles are specified in degrees, with 0 being straight ahead, left being 90 and right being -90 (for example), although any valid angle value can be used. Valid angles are between -180 and 180.
>
> setNextTurn() will remember the turn angle you give it, and execute it at the next intersection. The line tracking algorithm will then go back to the default turn angle for subsequent intersections.
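
The one-shot behaviour described in that quote can be modelled as a tiny state machine. This is a sketch of the documented semantics only, not the Pixy2 firmware: `TurnModel` and `onIntersection` are hypothetical names, and the default angle of 0 (straight ahead) is an assumption. It is useful for reasoning about two failure cases: an intersection detected before the requested turn is set, and an extra (spurious) intersection that uses up the requested turn early.

```cpp
#include <cstdint>

// Hypothetical model of the documented setNextTurn() semantics.
struct TurnModel {
    int16_t defaultTurn = 0;  // assumed default: straight ahead
    int16_t nextTurn = 0;
    bool pending = false;     // true while a one-shot turn is waiting

    // Remember a turn for the next intersection only.
    void setNextTurn(int16_t angle) {
        nextTurn = angle;
        pending = true;
    }

    // Called when an intersection is reached: returns the angle taken.
    // A pending turn is used once, then the default applies again.
    int16_t onIntersection() {
        if (pending) {
            pending = false;
            return nextTurn;
        }
        return defaultTurn;
    }
};
```

In this model, calling `setNextTurn(45)` and then hitting two intersections yields 45 for the first and the default for the second, so any intersection reported between the barcode and the real junction would leave the real junction with the default angle.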

At the moment, because of hardware constraints, once the Pixy2 is mounted I can no longer reach its USB port to see what the camera sees (I have ordered an L-shaped micro-USB cable, but it has not been delivered yet). Until it arrives, I am working blind.

I can see that the barcode reading works, since the LEDs change on every even/odd lap when Pixy sees the barcode.

However, sometimes it turns in the correct direction and sometimes it does not. I cannot identify a pattern.

Is the line pattern OK? Should I have "harder" junctions? (Here the two branches start out nearly tangent to each other.)

Apart from waiting for the new cable to arrive, what do you suggest to troubleshoot the situation?